You’ve poured your heart and soul into your website, yet it’s lost in the search engine results. How frustrating is that? It’s like hosting a party where nobody shows up. You may have the best content, but without a technical SEO audit, finding what’s holding it back is like looking for a needle in a haystack.

A technical SEO audit is your guide through the algorithm maze and site architecture. It will uncover the hidden issues that are holding your website back, from slow load times to broken links. You’ll learn how to optimise your site so it stands out in search results.

Get into the techy stuff, and you’ll not only get more visibility but also a better experience for your visitors. Keep reading and I’ll show you how to turn your website into a traffic machine.

What is a Technical SEO Audit?

A technical site audit gives you insight into how a website performs and shows you where it can earn more visibility in search results.

This process lets you examine a website's backend to find issues that are holding it back. These include site speed, mobile responsiveness, and URL structure.

You can think of it as a website health check—without this regular check-up, small issues can become big problems that affect traffic and user experience.

When it comes to running a technical SEO audit, timing is everything. Conduct one after big changes, like a redesign or new content management system.

Regular audits, ideally quarterly, will keep your site healthy. If you see a drop in traffic or rankings, an audit is the first step to diagnose the problem. Proactivity lets you prevent issues before they affect user experience and visibility.

Preparation

Preparation is key to a successful technical SEO audit. Do the right things and you’ll get a thorough review of your website’s technical foundation.

Set Up Analytics and Search Engine Properties

First, you must install Google Analytics (GA) and Google Search Console (GSC) immediately after setting up your website.

Both track user behaviour and website performance so you can find issues that can affect your rankings.

This will give you access to valuable data like search queries, impressions, and click-through rates, but we'll deal with these tools later.

Site Crawl

Run a site crawl using tools like Screaming Frog or Sitebulb to find technical issues.

These SEO audit tools will give you a complete overview of your site, showing broken links, duplicate pages, duplicate content, and metadata issues. Fixing these will improve your site’s crawlability and user experience.

Most of the best audit tools aren't free, however. If you want to run a technical SEO audit with these premium tools and get actionable insights from them, be prepared to pay for the privilege.

That said, Ahrefs Webmaster Tools (AWT) is a more than capable free audit tool that can help you analyse your site's technical components. Ensure you have added your site to GSC so you can use AWT to review your site pages.

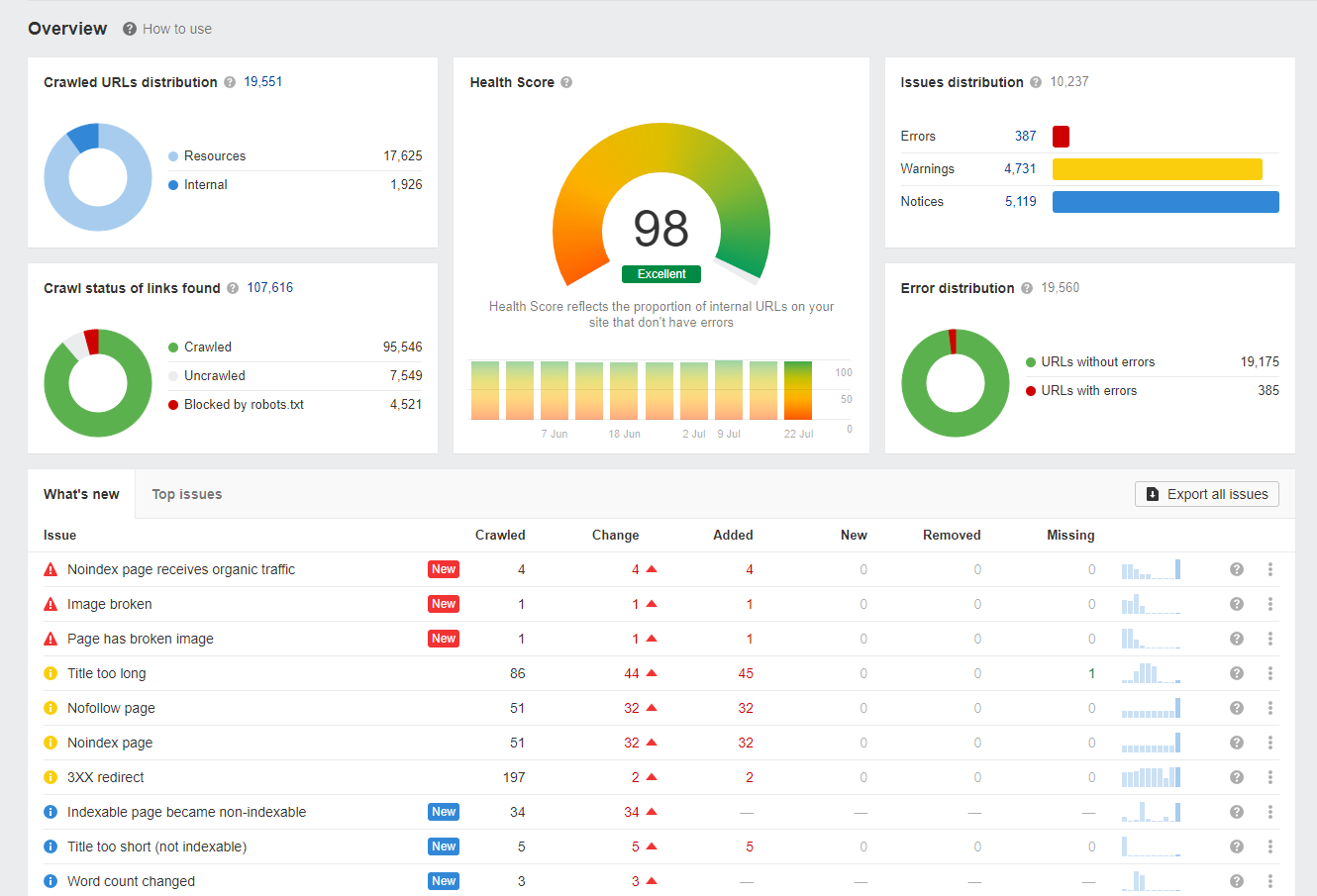

After running a technical SEO audit, which could take hours (depending on how many pages your site has), you should see your site's audit score on top of the errors and warnings the tool will have found.

Technical SEO audit process

Once the audit results are in, it's time for the fun part! You should have access to the errors that are keeping search engines from crawling and indexing your site properly. Below is a breakdown of the different issues your audit tool will have found and how to resolve them properly.

For this post, we'll primarily look at how you can spot and fix these issues using Ahrefs Webmaster Tools due to its accessibility and comprehensive report. If the factor isn't available in AWT, we'll look at other alternatives you can use. Let's begin!

Crawling and Indexing

One of the first steps in this process is to check your entire site’s indexation. A deep dive into your index status will show you if any important pages are missing from search results so you can fix them and improve your online presence.

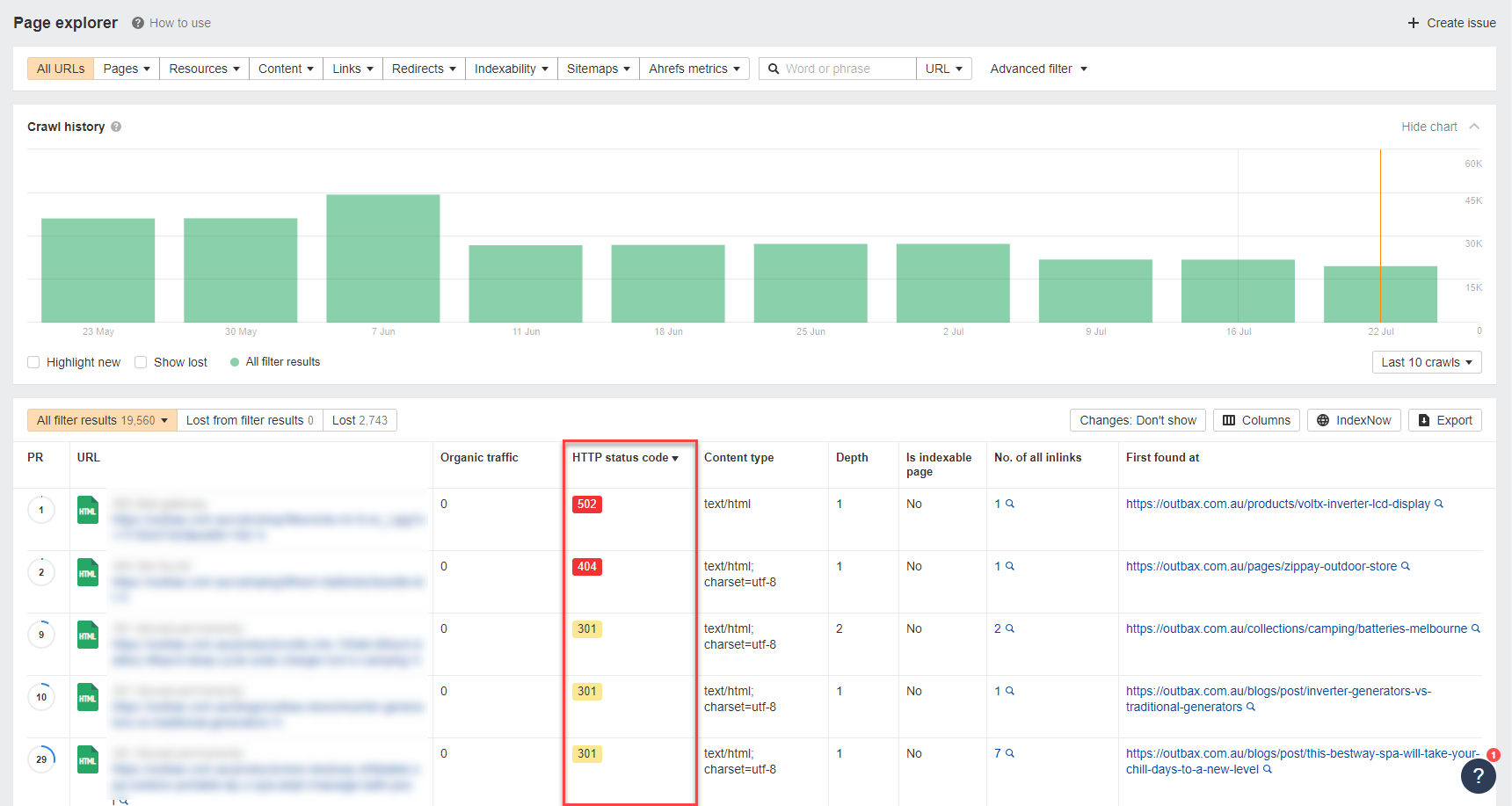

Using AWT, go to Tools > Page Explorer to see the list of pages the tool found on your site during the audit. From here, you can see the HTTP Status Code of each page, which tells you which ones search engines can crawl and show on search results.

Here's a concise list of common HTTP status codes described in SEO terms:

- 200 OK: Ideal for SEO, indicating successful page retrieval.
- 301 Moved Permanently: Crucial for maintaining SEO value when redirecting pages.
- 302 Found: Temporary redirect; search engines may keep the original URL indexed, so use a 301 for permanent moves.
- 304 Not Modified: Efficient for caching, reducing server load without SEO impact.
- 400 Bad Request: Indicates client error, potentially harming user experience and SEO.
- 401 Unauthorized: May prevent search engine crawlers from accessing content.
- 403 Forbidden: Can block search engines from indexing important pages.
- 404 Not Found: Negatively impacts SEO if common, signaling poor user experience.
- 500 Internal Server Error: Severe issue that can harm SEO if persistent.
- 503 Service Unavailable: Temporary unavailability, less damaging to SEO than 404 if brief.

Use the results you find here to decide which pages Google should crawl and which it shouldn't.
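If you export the page list with its status codes, a small script can do a first pass of this triage for you. The sketch below is only an illustration: the `triage_status` function, its buckets, and the sample pages are all hypothetical, and the suggested actions are rough starting points rather than rules.

```python
# Hypothetical triage helper: the buckets and wording are illustrative only.
def triage_status(code: int) -> str:
    """Map an HTTP status code to a rough SEO follow-up action."""
    if code == 200:
        return "ok"
    if code in (301, 308):
        return "permanent redirect: update internal links to the final URL"
    if code in (302, 303, 307):
        return "temporary redirect: confirm it should not be permanent"
    if code == 304:
        return "ok (cached)"
    if code in (401, 403):
        return "access blocked: check whether crawlers should reach this page"
    if 400 <= code < 500:
        return "client error: fix or redirect the URL"
    if 500 <= code < 600:
        return "server error: investigate the server"
    return "unknown: review manually"


# Made-up sample of a crawl export (URL path -> status code).
pages = {"/": 200, "/old-post": 301, "/missing": 404, "/checkout": 500}
report = {url: triage_status(code) for url, code in pages.items()}

for url, action in report.items():
    print(f"{url}: {action}")
```

From there, you can sort the report so the client and server errors sit at the top of your to-do list.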

To help you analyse why your site pages have these status codes, you can look at the following factors:

Robots.txt

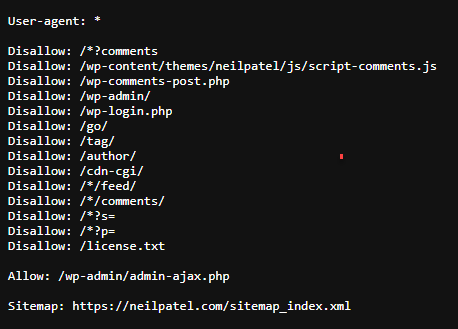

Your site’s robots.txt file which is a guide for search engines on which pages to crawl and which to ignore.

Here's a screenshot of a robots.txt file:

Basically, make sure important pages are not accidentally blocked from crawling as this can severely impact your website’s performance in search engine results.

At the same time, ensure that non-important pages (login, user profiles, etc.) are disallowed in your robots.txt. Keep in mind that robots.txt controls crawling, not indexing, so use a noindex robots meta tag on pages that must stay out of search results.

Also, review robots meta tags and the X-Robots-Tag header so you can control content indexing on a page-by-page basis. These give you more flexibility over how search engines treat certain types of content. Together, they give search engines a clear path to follow and improve your site’s visibility.

You can set up your robots.txt by creating a file in a plain-text editor such as Notepad, following this format:

User-agent: *
Disallow: /admin/
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /private/

User-agent: Googlebot
Allow: /articles/
Disallow: /search

User-agent: Bingbot
Crawl-delay: 10

User-agent: Badbot
Disallow: /

Sitemap: https://www.example.com/sitemap.xml

The robots.txt file follows a specific format and structure to communicate crawling instructions to search engine bots effectively. It's a plain text file where each directive starts on a new line.

Comments can be added using lines that begin with '#', though these are not shown in the example above.

The file uses 'User-agent:' to specify which bot the subsequent rules apply to, with an asterisk (*) serving as a wildcard to indicate all robots.

'Disallow:' and 'Allow:' directives indicate which directories or pages should not be crawled or are explicitly permitted, respectively.

The 'Sitemap:' directive points to the location of the sitemap file, while 'Crawl-delay:' suggests a delay in seconds between consecutive requests from a bot.

Importantly, specific bots can have their own set of rules, allowing for customized crawling instructions for different search engines or bot types.

After creating this file, you can upload it to your site's root directory.
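Before uploading, it can help to test the rules against a few URLs. Here is a minimal sketch using Python's built-in `urllib.robotparser` with the same rules as the example above. The `allowed` helper and the `SomeOtherBot` user agent are made up for illustration, and each search engine's own parser may interpret edge cases differently from Python's.

```python
from urllib.robotparser import RobotFileParser

# Same rules as the example robots.txt, one group per user agent.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /private/

User-agent: Googlebot
Allow: /articles/
Disallow: /search

User-agent: Bingbot
Crawl-delay: 10

User-agent: Badbot
Disallow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """Hypothetical helper: can this user agent fetch this path?"""
    return parser.can_fetch(agent, "https://www.example.com" + path)


print(allowed("SomeOtherBot", "/admin/login"))  # wildcard group applies
print(allowed("Googlebot", "/search?q=shoes"))  # Googlebot group applies
print(allowed("Googlebot", "/admin/"))          # see the note below
print(parser.crawl_delay("Bingbot"))
print(parser.site_maps())                       # needs Python 3.8 or later
```

One thing this surfaces: a bot with its own group follows only that group, so in this example Googlebot is not covered by the `/admin/` rule in the wildcard group. If you want those paths blocked for Googlebot too, repeat the Disallow lines in its group.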

Sitemaps

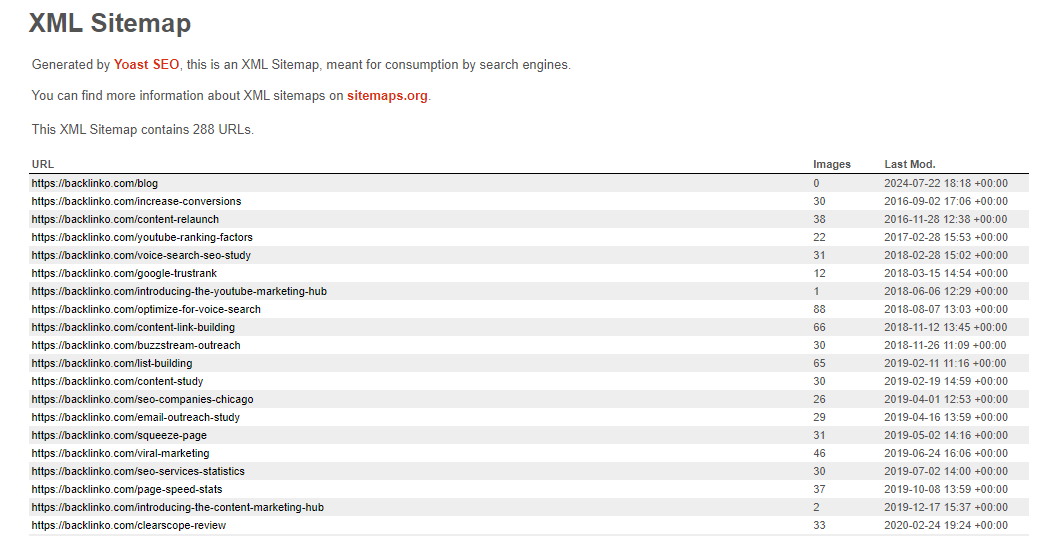

XML sitemaps list the pages available for crawling on your site.

This is advantageous as it helps search spiders find the most important pages on your site that need crawling. It improves site discoverability and helps you make better use of your crawl budget, which is how many pages Google will crawl in a given timeframe.

While spiders can still crawl pages that don't appear on XML sitemaps, it'll take them longer to find them as opposed to having them immediately available.

Here's an example of what a sitemap looks like:

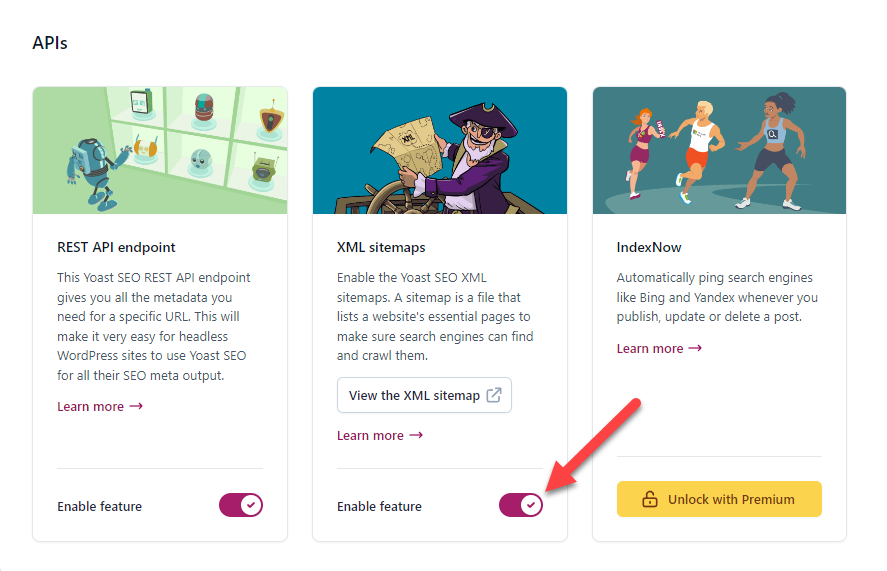

To set up your site's sitemaps, you can use Yoast SEO or any SEO plugin on WordPress with sitemaps functionality.

Enable the XML Sitemap feature in Yoast's Settings page and the plugin will automatically create one for you. It'll also add new pages to the sitemap when you publish them.

By checking that your sitemap is up to date and accurate to your site’s structure, you’ll make sure important content is presented to search engines in a clear way and increase your chances of better rankings.
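One way to run that check is to compare the URLs in the sitemap against the pages you know are published. The sketch below uses only Python's standard library. The sample sitemap, the `published` set, and the `sitemap_urls` helper are all made up for illustration; in practice you would load the real sitemap file and your own page list.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Made-up sitemap content; normally you would read this from sitemap.xml.
SITEMAP_XML = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/blog/</loc></url>
  <url><loc>https://www.example.com/old-page/</loc></url>
</urlset>
"""


def sitemap_urls(xml_bytes: bytes) -> set:
    """Collect every <loc> value from a sitemap document."""
    root = ET.fromstring(xml_bytes)
    return {loc.text.strip() for loc in root.iter(f"{{{SITEMAP_NS}}}loc")}


# Hypothetical list of pages that are currently live on the site.
published = {
    "https://www.example.com/",
    "https://www.example.com/blog/",
    "https://www.example.com/new-page/",
}

listed = sitemap_urls(SITEMAP_XML)
missing = published - listed  # live pages the sitemap doesn't mention
stale = listed - published    # sitemap entries that are no longer live

print("Missing from sitemap:", sorted(missing))
print("Stale sitemap entries:", sorted(stale))
```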

Crawl Depth

Crawl depth, the number of clicks it takes to reach a page from the homepage, depends on internal linking, which is important for both user navigation and search engine crawling. Internal links create pathways between pages so search engines can fully explore your site’s content and guide users to related topics. This seamless navigation can not only increase users' dwell time but also spread authority and ranking potential across your site.

By placing internal links strategically you can highlight your most important pages so they can get the attention they need for search engine performance. Implementing these in a technical SEO audit will contribute greatly to your website’s overall ability to attract and retain organic traffic.

URL Structure and Canonicalization

When doing a technical SEO audit, check that the website's URL structure and meta descriptions are clear and user-friendly, as both affect user experience and how the site performs in search.

A clean URL is one that is simple, easy to read and indicates what content the user will get when they click through. For example, instead of a long URL with many parameters and unnecessary characters, a well-structured URL would show the website hierarchy, like “www.example.com/products/category/product-name”.

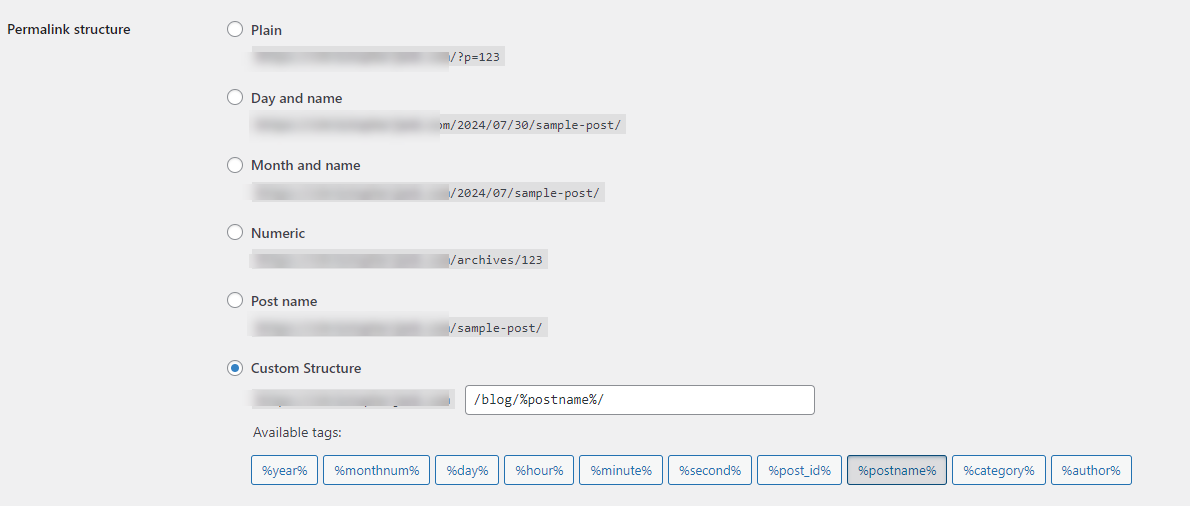

If you're running your site on WordPress, you can first change the URL structure in Settings > Permalinks. Choose Post name so all pages and posts will show the name of the slug as their URLs. You can also select Custom Structure if you want to structure the URL in a specific way.

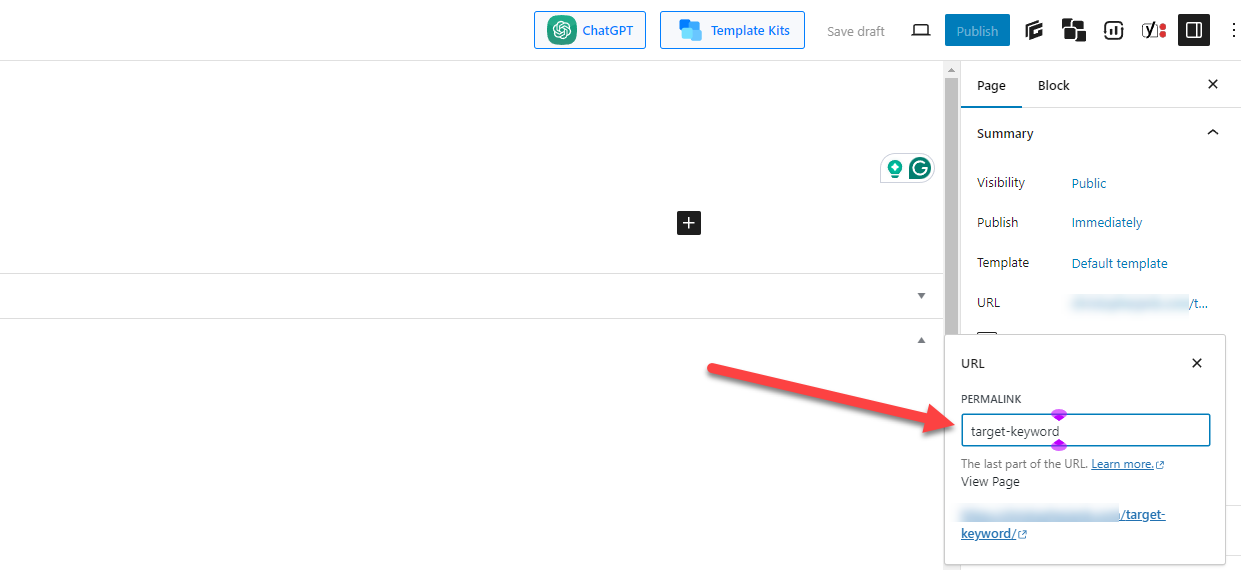

On the page level, you can change the URL to make it shorter and feature its target keyword.

This will also help users to understand the page content and make it easier for search engines to crawl and index.

Canonical Tags

Canonical tags must also be implemented correctly to prevent duplicate content issues, which can severely impact a site’s performance.

During the audit, make sure to check that canonical tags are not sending mixed signals, especially on indexation. Discrepancies between canonical URLs and indexed pages can cause indexing issues, which means pages that should rank do not. Checking and fixing these mixed signals regularly will allow website owners to maintain their authority in search rankings.

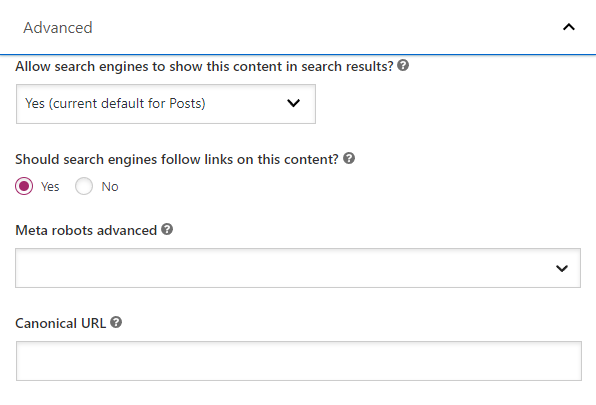

You can assign canonical tags on your page using a plugin like Yoast SEO. Click on the Advanced tab on the page you want to edit and enter your desired canonical URL for that page.
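To spot-check a page outside of a plugin, you can read the canonical tag straight from its HTML. The following is a rough sketch using Python's built-in `html.parser`. The `CanonicalFinder` class, the `check_canonical` function, and its four result labels are my own naming for illustration, not something Yoast or Ahrefs provides.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.canonicals.append(attr.get("href"))


def check_canonical(page_url: str, html: str) -> str:
    """Return a rough verdict on the page's canonical setup."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing"
    if len(finder.canonicals) > 1:
        return "multiple"  # conflicting tags send mixed signals
    if finder.canonicals[0] == page_url:
        return "self"
    return "points elsewhere"


# Made-up page: a filtered URL that canonicalises to the clean version.
html = '<head><link rel="canonical" href="https://www.example.com/shoes/"></head>'
print(check_canonical("https://www.example.com/shoes/?sort=price", html))
print(check_canonical("https://www.example.com/shoes/", html))
```

A "points elsewhere" result isn't automatically a problem. It's only worth a closer look when the page is one you expect to rank on its own.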

Duplicate Content

Besides canonicalization, duplicate content must be addressed, as having multiple pages with the same content can dilute the ranking potential of a website.

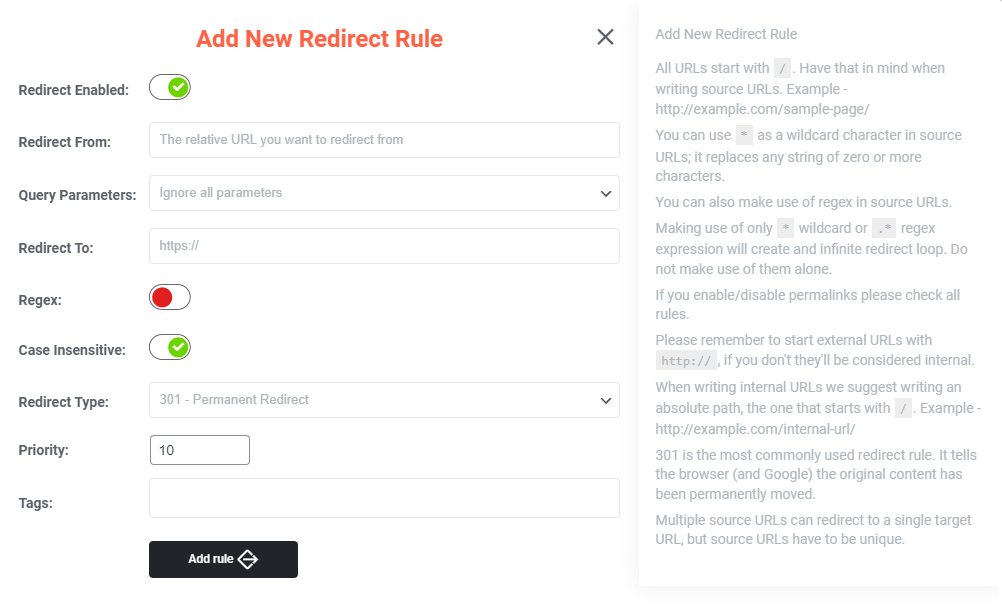

One way to consolidate similar pages is through 301 redirects which will guide users from duplicate content to a single authoritative URL so it can get more visibility in search results. This will not only prevent link equity from being spread across multiple pages but also simplify the user journey.

To help you consolidate these pages, use the WP 301 Redirect plugin to effectively redirect visitors to your desired page instead of the duplicate one. Only do this if you plan on deleting the duplicate page from your site.

This way, whatever authority the duplicate page has will be transferred and combined with the page you want people to visit.

URL Parameters

URL parameters generated by tracking or sorting functions can also confuse both users and search engines. Handle them deliberately: point canonical tags at the clean version of the page, and make sure your internal links go to that clean URL rather than to the versions with parameters.
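To find the clean version of a parameterised URL in bulk, you can strip the parameters that don't change the page's content. This is a minimal sketch with Python's `urllib.parse`. The `TRACKING_PARAMS` list and the `clean_url` function are assumptions for illustration, so adjust the list to the parameters your own site actually generates.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that don't change page content on this site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sort", "sessionid"}


def clean_url(url: str) -> str:
    """Drop tracking and sorting parameters, keeping everything else."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(clean_url("https://www.example.com/products/shoes?utm_source=news&sort=price&page=2"))
print(clean_url("https://www.example.com/products/shoes?utm_source=news"))
```

The cleaned URL is the one to use in canonical tags and internal links.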

Internal Linking and Site Architecture

A well-structured hierarchy will allow users to navigate the website easily and find relevant content with minimal effort. This can be achieved by having clear categories and subcategories and making sure all important content is within three clicks of the homepage.

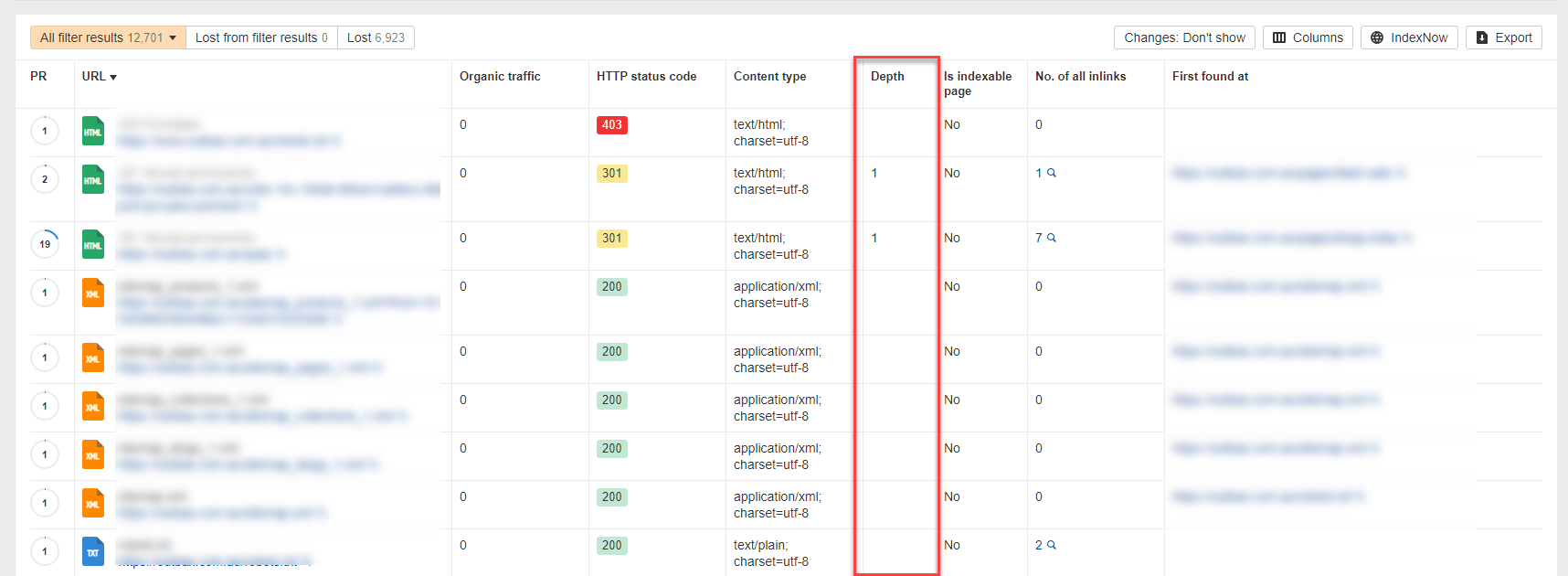

You can trace the crawl depth of the pages on Ahrefs by going to Tools > Page Explorer and checking the Depth column.

From here, begin looking at pages with a crawl depth of 3 or deeper and find ways to decrease their depth and make them more crawlable for search spiders.
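If you're curious how a depth figure like this is worked out, it boils down to a breadth-first walk of your internal links starting from the homepage. The sketch below shows the idea on a made-up link map. The `crawl_depths` function and the sample URLs are hypothetical, and it is not a description of how Ahrefs calculates its Depth column.

```python
from collections import deque


def crawl_depths(links: dict, home: str = "/") -> dict:
    """Breadth-first search: fewest clicks from the homepage to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Made-up internal link map: page -> pages it links to.
links = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/post-a/"],
    "/blog/post-a/": ["/blog/post-b/"],
    "/blog/post-b/": ["/blog/post-c/"],
    "/products/": [],
}

depths = crawl_depths(links)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
print(too_deep)
```

Adding a single link from a shallow page, such as a category page or the homepage, is usually enough to pull a deep page back within reach.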

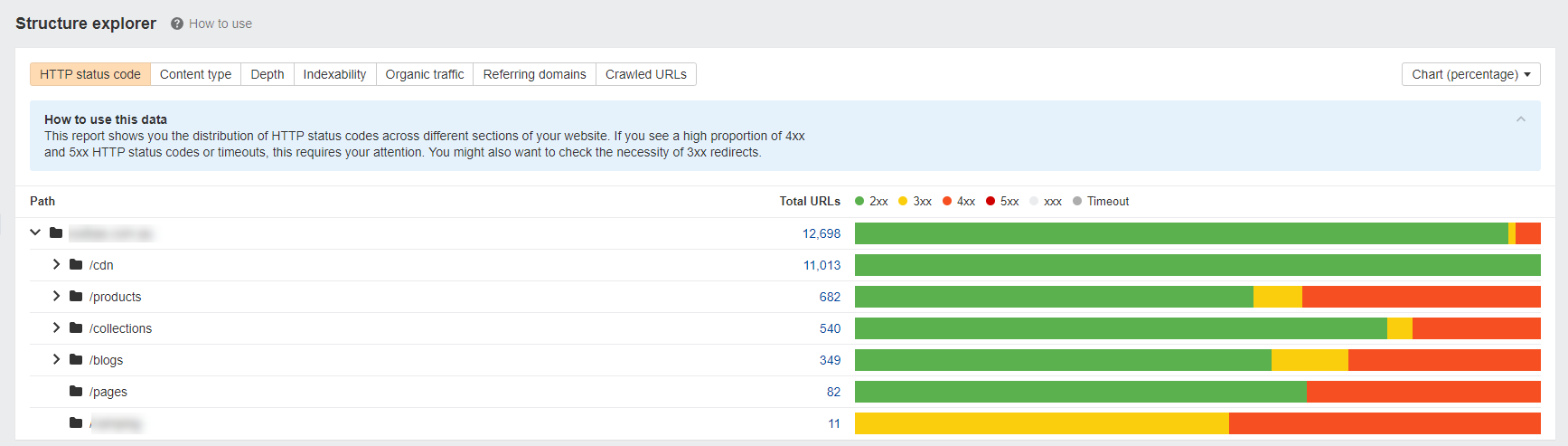

You can also check Ahrefs' Structure Explorer to see the distribution of pages and their different status codes, depth, indexability, and more across the various folders of your site.

For example, the navigation menu should be simple, with logical placement of internal and external links that match user intent. By reviewing this structure, you can identify potential barriers to user engagement and fix them to improve dwell time and reduce bounce rates.

Redirect Chains

Redirect chains are sequences of URLs that lead to multiple redirects before reaching the final destination. These chains can cause unnecessary delays, which can cause users to abandon their search and potentially hurt the site’s search engine ranking.

A technical SEO audit should aim to identify these redirect chains and simplify them into a single redirect wherever possible. This can be done by updating links and removing redundant paths, and it will result in a smoother user journey and faster load time, both of which are good for SEO.

For starters, you need to identify which indexed pages on your site are redirecting to a specific page on your site. Check the HTTP Status Code column on Ahrefs' Page explorer in the audit results and see if it has a 301 code, which means the page redirects to another.

You can also add the redirect chain URLs column to the table to help you dig deeper into these pages.

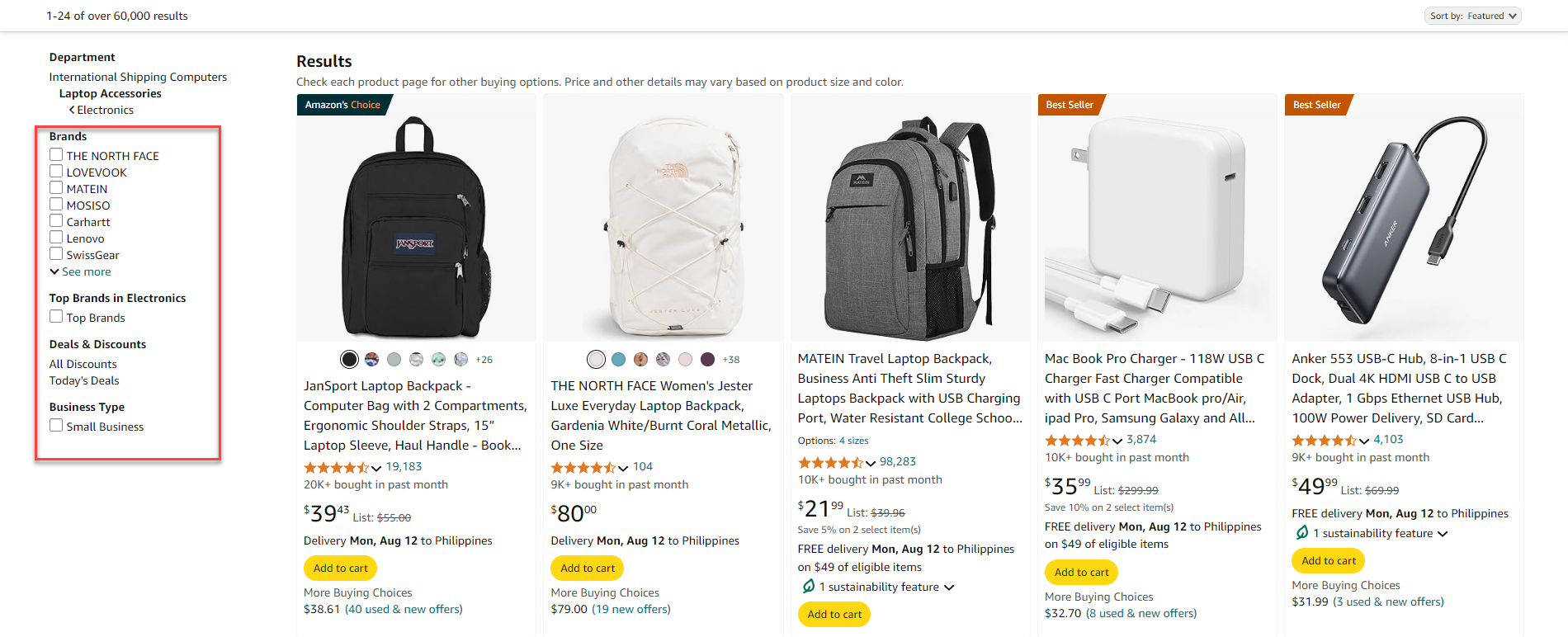
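Once you have the list of redirects, tracing each one to its final destination shows which ones hop more than once. Here's a small sketch of that tracing logic on a made-up redirect map. The `redirect_chain` function and the sample paths are hypothetical, and the `max_hops` limit is just a safety net I've assumed.

```python
def redirect_chain(start: str, redirects: dict, max_hops: int = 10) -> list:
    """Follow a URL through the redirect map and return every stop."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in chain[:-1]:  # we've been here before: redirect loop
            break
    return chain


# Made-up redirect map: source URL -> where it redirects to.
redirects = {"/old": "/older", "/older": "/new", "/a": "/b", "/b": "/a"}

# A chain has more than one hop, i.e. more than two stops.
chains = {}
for source in redirects:
    stops = redirect_chain(source, redirects)
    if len(stops) > 2:
        chains[source] = stops

for source, stops in chains.items():
    print(" -> ".join(stops))
```

For a genuine chain like `/old`, the fix is to point it straight at the last stop. For a loop like `/a` and `/b`, one of the redirects needs to be removed.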

Faceted Navigation

Faceted navigation must also be optimized on eCommerce sites, where users filter products by attributes like size, colour or price.

Implementing faceted navigation for SEO success requires a strategic approach that balances user experience with search engine optimization. The process begins with prioritizing facets based on user behavior and keyword research, ensuring that the most valuable combinations are easily accessible to both users and search engines.

A well-structured URL system is crucial, as it helps search engines understand your site's hierarchy and facilitates the implementation of SEO controls. Effective use of canonical tags is essential to manage duplicate content issues, while robots meta tags and directives provide granular control over how search engines interact with your faceted pages.

Pagination best practices, such as using rel="next" and rel="prev" tags, can help on sites with large product catalogues by signalling how a paginated series fits together. Google has said it no longer uses these tags as an indexing signal, though other search engines may still read them. Throughout the implementation process, it's vital to monitor and adjust your strategy based on crawl stats, organic traffic, rankings, and user behaviour metrics.

Internal Linking Analysis

Broken Links

Broken internal links, which typically return 404 errors, are another issue that will surface during a technical SEO audit. They frustrate users by leading them to pages that no longer exist, which disrupts their experience and hurts the credibility of the website.

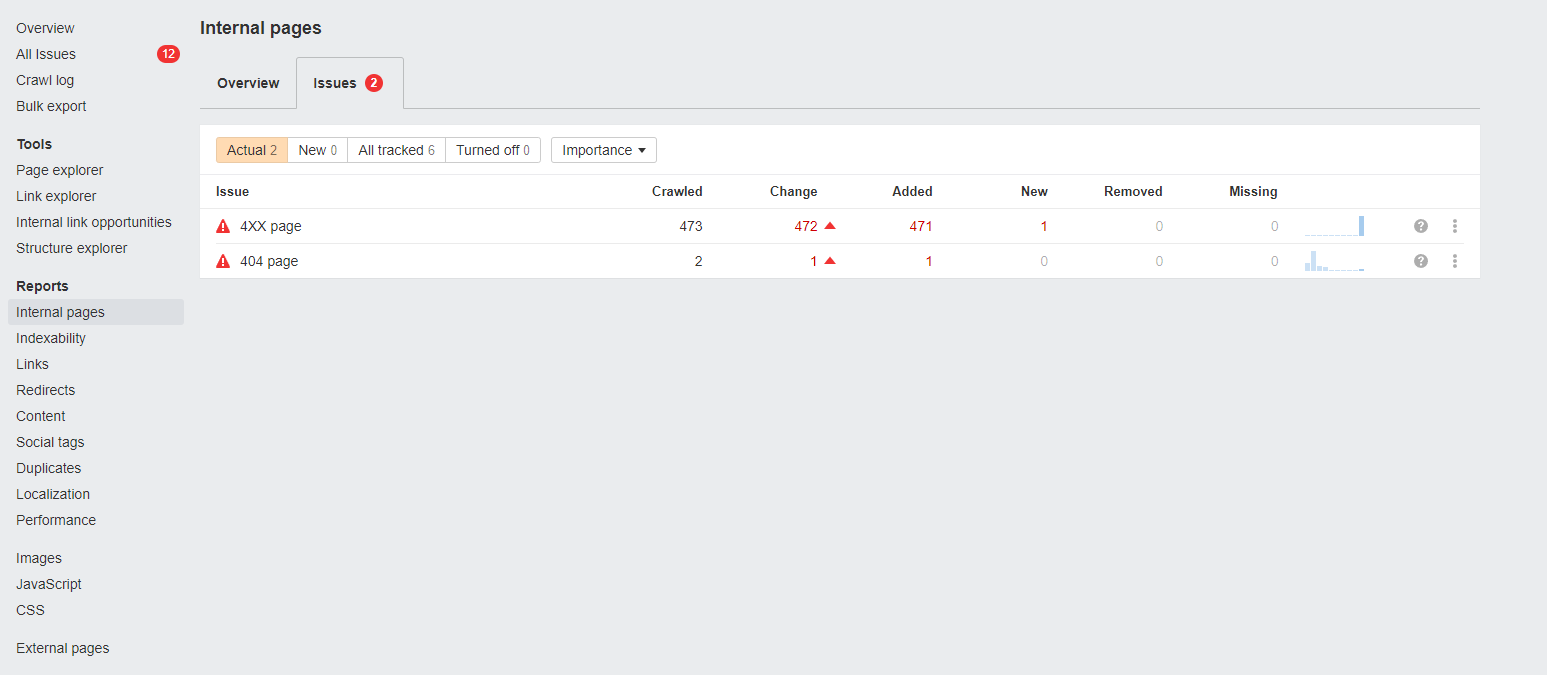

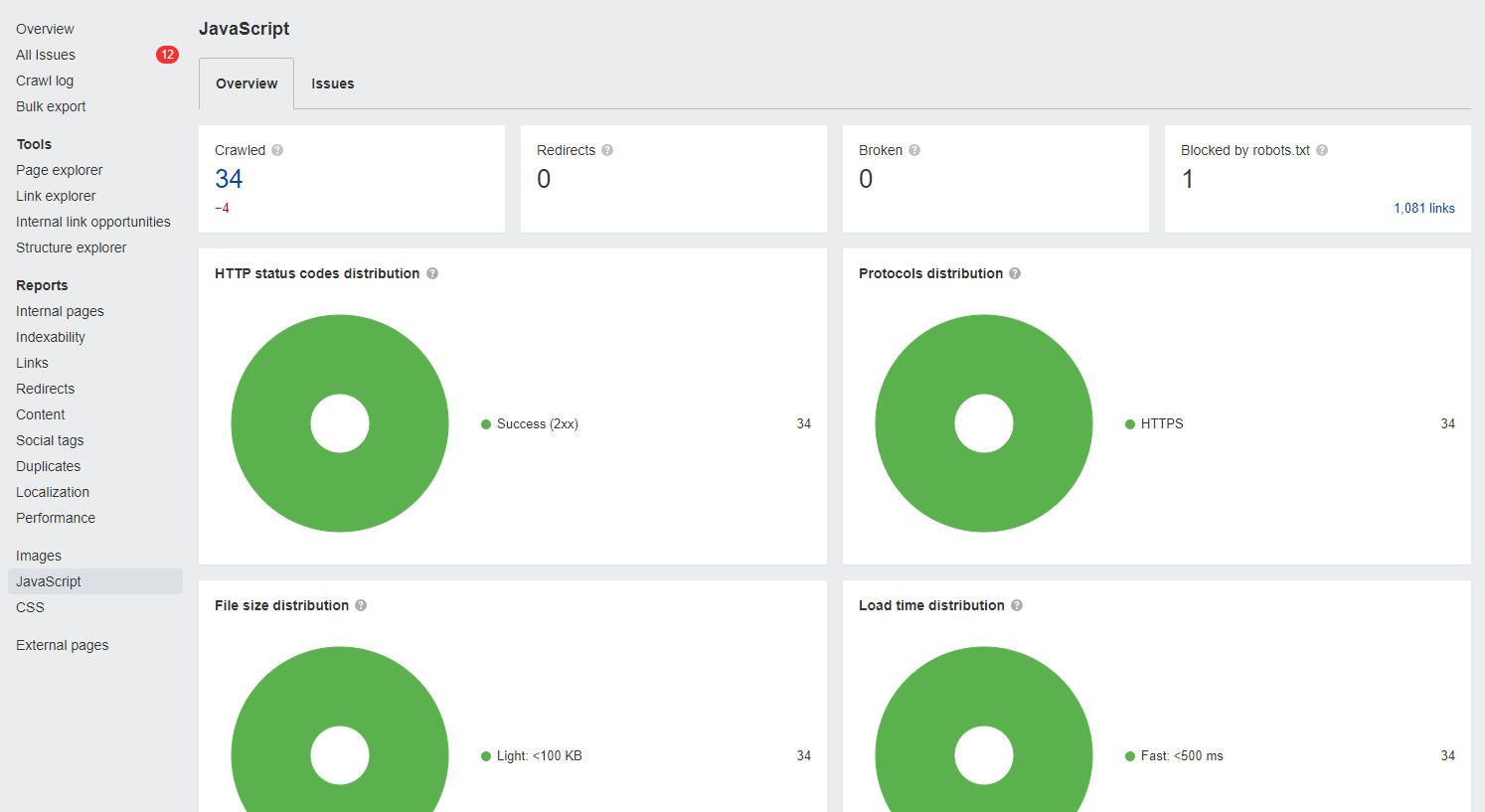

Finding these broken links can be done using Ahrefs. Click on Reports > Internal pages and go to the Issues tab to pinpoint the potential problems regarding your internal pages.

Regarding 4XX pages, look for pages with 404 status codes in the report.

Another way to look for broken links is to click on Reports > Links to see the Broken internal pages.

Once found, each broken link must be fixed, either by updating it to point to the correct page or by routing the old URL through a proper 301 redirect.

This proactive link management will not only improve user experience but also allow search engines to crawl the site properly and make sure all content is indexed correctly.

Orphan Pages

Lastly, orphan pages, which are pages that don’t have internal links pointing to them, must also be addressed during the audit process. These pages are hidden from users and search engines, which limits their visibility and SEO potential.

Find these pages by going to Tools > Page explorer and organising the pages in the table to show the lowest number of all inlinks at the top.

By linking these pages to the site’s navigation and contextually linking them from related content, their visibility can be increased, and organic traffic to those pages will improve.
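If you have a list of every page (from your sitemap, for example) and a map of internal links from a crawl, finding orphans is a simple set difference. Here's a minimal sketch of that idea. The `find_orphans` function and the sample data are hypothetical, and the homepage is excluded on the assumption that visitors reach it directly.

```python
def find_orphans(all_pages, links, home="/"):
    """Pages that no other page links to (the homepage is excluded)."""
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't count
    }
    return sorted(set(all_pages) - linked - {home})


# Made-up data: every known page, plus the internal links found in a crawl.
all_pages = ["/", "/about/", "/blog/", "/landing/old-promo/"]
links = {
    "/": ["/about/", "/blog/"],
    "/blog/": ["/", "/blog/"],
}

orphans = find_orphans(all_pages, links)
print(orphans)
```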

Technical On-Page Factors

Hreflang

Hreflang is an HTML attribute that tells search engines which language and regional version of a webpage to serve to users in different locations.

A correct setup of hreflang attributes will not only improve user experience by showing visitors content in their preferred language but also prevent duplicate content issues that can arise when multiple versions of the same page exist.

The Tools > Localization page on Ahrefs Webmaster Tools should show potential issues your site has regarding this factor.

During the audit, make sure to check if hreflang tags are pointing to the correct pages and that there are no errors in the language or country codes used.
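One check that's easy to automate is whether hreflang links are reciprocal: if the English page points to the German version, the German page should point back. Below is a rough sketch of that check. The `missing_return_links` function and the sample URLs are made up, and the input format (page URL mapped to its language codes and alternate URLs) is an assumption about how you'd collect the data from a crawl.

```python
def missing_return_links(hreflang: dict) -> list:
    """Find alternates that don't link back to the page that references them.

    `hreflang` maps each page URL to {language code: alternate URL}.
    """
    problems = []
    for page, alternates in hreflang.items():
        for code, alt_url in alternates.items():
            if alt_url == page:
                continue  # self-reference, nothing to verify
            if page not in hreflang.get(alt_url, {}).values():
                problems.append((page, code, alt_url))
    return problems


EN = "https://www.example.com/en/"
DE = "https://www.example.com/de/"

# Made-up data: the German page forgets to reference the English one.
hreflang = {
    EN: {"en": EN, "de": DE},
    DE: {"de": DE},
}

problems = missing_return_links(hreflang)
for page, code, alt_url in problems:
    print(f"{alt_url} has no return link to {page} (referenced as '{code}')")
```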

Schema Markup

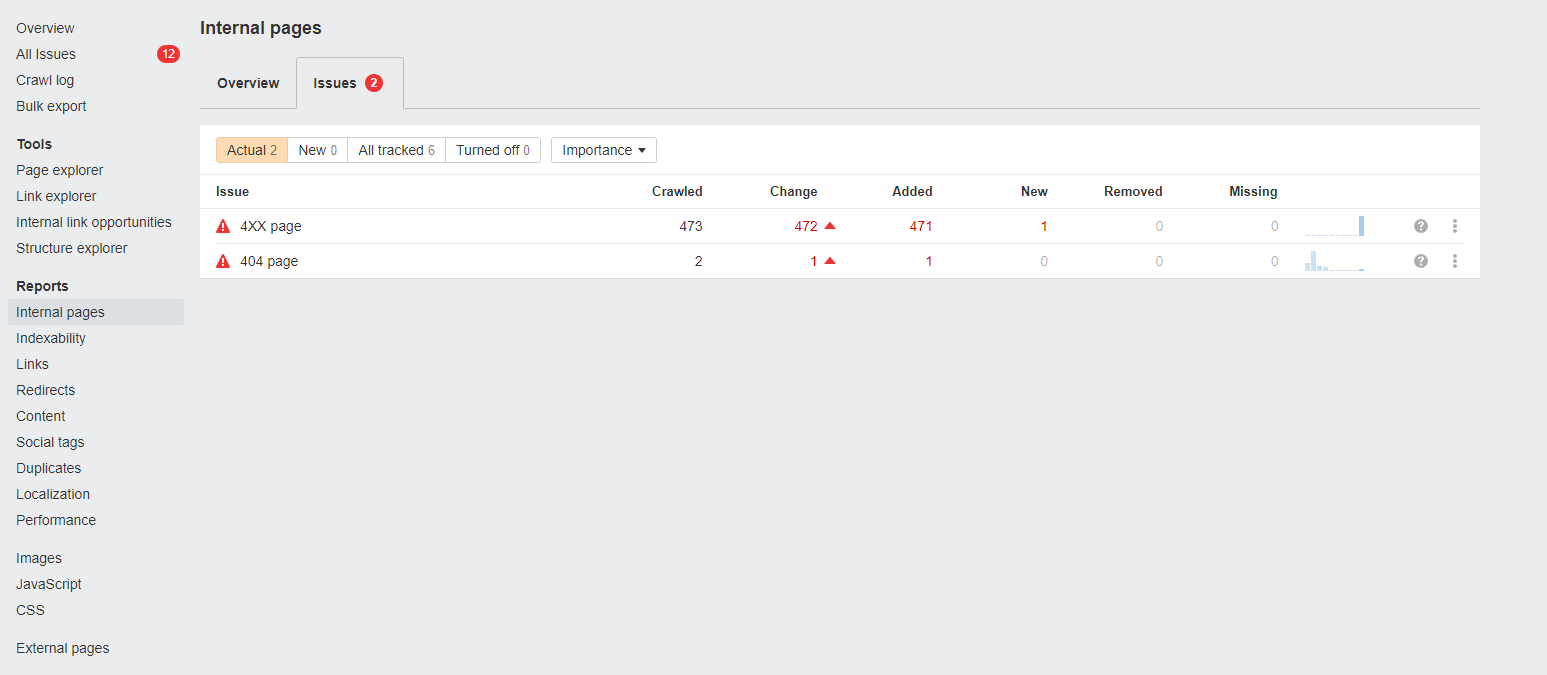

Another thing to check in your technical SEO audit is schema markup, which can boost your site’s visibility in search results. It’s a structured data vocabulary that allows search engines to understand your content better and show enriched results like review stars, events or product availability.

Yoast SEO lets you choose the schema markup for the page or post you're creating and generate one for the page.

If you want complete control over how your schema markup looks, use TechnicalSEO.com's Schema Markup Generator to manually generate one for your page. Choose the type of markup you want for the page and input the information yourself.

Copy the code and paste it inside the page's <head> section.

Make sure to use the correct schema types for your content to make the most out of this feature. The audit should check for existing schema markup, identify any errors or warnings in the implementation and see if there are more schema types that can be applied.

This extra layer of information can lead to higher click-through rates and better search visibility, and ultimately more traffic to your site.
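To give you an idea of what the generated code looks like, here is a minimal sketch that builds an Article markup as JSON-LD using Python's `json` module. The `article_jsonld` function and the headline, author, and date values are placeholders I've made up. The property names come from the schema.org Article type, but check the type's documentation for the properties your content needs.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str) -> str:
    """Build a JSON-LD script tag for a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder values for illustration only.
snippet = article_jsonld("How to Run a Technical SEO Audit", "Jane Doe", "2023-07-30")
print(snippet)
```

The printed snippet is what you would paste into the page, after which you can validate it with a structured data testing tool.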

Javascript

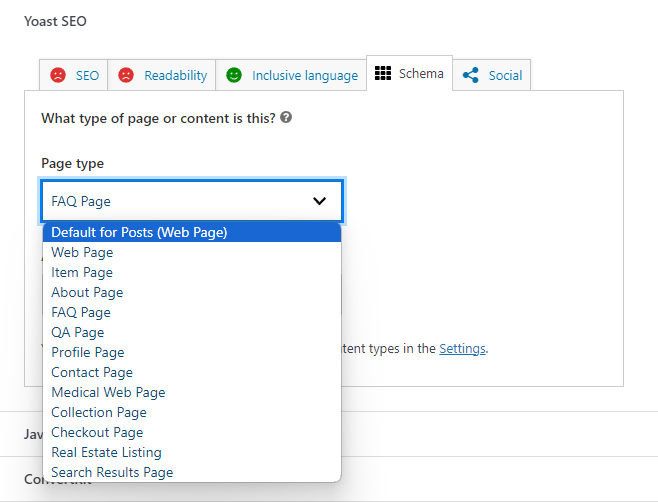

Javascript content handling is another area to check during a technical SEO audit. Search engines have improved in crawling and indexing of JavaScript heavy websites but proper implementation is still needed to make it fully functional.

If your site is heavily dependent on JavaScript frameworks make sure to check that the rendered content is accessible to search engine bots and there are no blocking issues in your robots.txt file. This also means that important content is not hidden behind user interactions like clicks or scrolls.

Go to the JavaScript page on AWT to monitor issues on your site regarding this factor.

Regular audits will help identify potential issues and website owners can adopt best practices in JavaScript implementation so that all users, human or crawler, can access the content.

Mobile Optimization

Mobile-friendly testing is a must in any technical SEO audit, as mobile internet usage is growing and surpassing desktop usage in many areas. These tests will give you valuable insights into your site’s mobile usability and identify issues that can hinder user experience or cause high bounce rates.

Responsive Design

When checking responsive design implementation make sure your site adapts perfectly to different screen sizes so it’s consistent across all devices. Responsive design involves using flexible grid layouts, images and CSS media queries to configure web content dynamically based on the device showing it.

A responsive site not only improves user experience but is also easier for search engines to handle, as it serves mobile and desktop visitors from a single URL instead of separate ones, which simplifies indexing and ranking. Make sure elements like images, buttons and navigation menus scale properly so they don’t overlap or become inaccessible, which can discourage users from engaging with your content.

Content Parity

Content parity between mobile and desktop version of your site is key to provide equal access to information. This means all users whether they are using mobile or desktop can access the same high quality content.

Don’t show mobile users stripped-back versions of your site or limited information. Instead, make sure to have a consistent narrative and overall user experience across both.

Also consider the formatting of your content. Mobile device readers prefer shorter paragraphs, bullet points and smaller images which can improve readability and engagement. Having rich snippets and structured data on both platforms will help preserve content integrity and search visibility.

Site Speed and Performance

Core Web Vitals

A technical SEO audit should check Core Web Vitals, a set of performance metrics that affect user experience and search engine rankings. Core Web Vitals has three key components:

- Largest Contentful Paint (LCP) - measures loading time, specifically the time it takes for the largest element on the page to render. Ideal LCP is under 2.5 seconds. By checking this metric, webmasters can identify which part of their site is causing slow loading and where to improve.
- First Input Delay (FID) - measures a page's responsiveness by quantifying the delay between a user's first interaction and the browser's response, with an ideal score being under 100 milliseconds. Note that Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital in March 2024; a good INP is 200 milliseconds or less.
- Cumulative Layout Shift (CLS) - assesses visual stability by measuring unexpected layout shifts during page loading, with a good score being less than 0.1, helping to ensure a smooth and frustration-free user experience.
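If you collect these numbers for several pages, a short script can flag the ones that miss the targets above. The sketch below uses the thresholds quoted in this list. The `failing_metrics` function, the metric key names, and the sample measurements are all made up for illustration.

```python
# Thresholds taken from the list above; the key names are my own.
THRESHOLDS = {"lcp_seconds": 2.5, "fid_ms": 100, "cls": 0.1}


def failing_metrics(measured: dict) -> list:
    """Return the metrics whose measured value exceeds its threshold."""
    return sorted(
        name
        for name, limit in THRESHOLDS.items()
        if name in measured and measured[name] > limit
    )


# Made-up measurements for two pages.
pages = {
    "/": {"lcp_seconds": 1.9, "fid_ms": 40, "cls": 0.02},
    "/blog/": {"lcp_seconds": 3.1, "fid_ms": 80, "cls": 0.25},
}

for url, measured in pages.items():
    failed = failing_metrics(measured)
    print(url, "needs work on:" if failed else "passes", ", ".join(failed))
```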

Image Optimisation

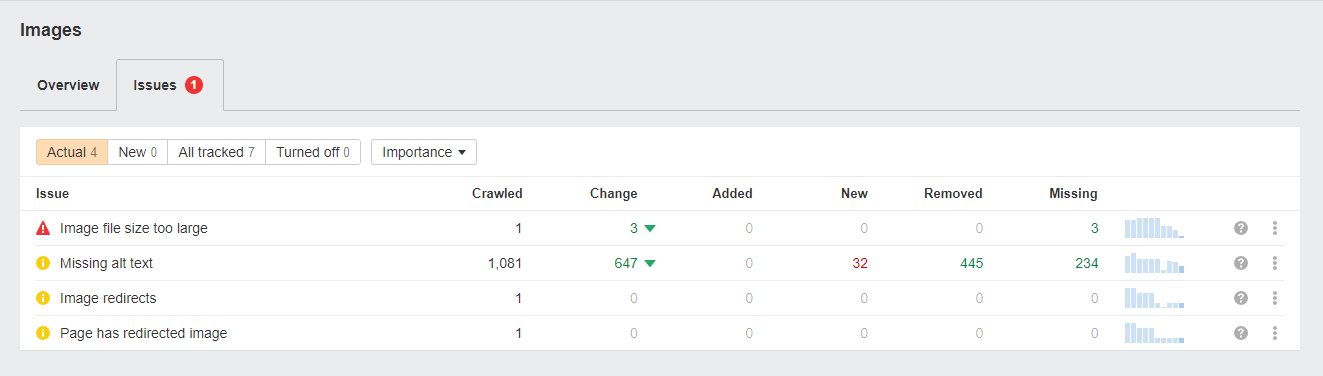

First off, you can monitor your site's image content issues from Ahrefs. It primarily lets you know if you have large image files that slow down a page's loading speed.

By using WebP and responsive images, you can reduce file size without sacrificing quality. Lazy loading, where images are only loaded as they enter the viewport, can also improve initial loading time.

A plugin like ShortPixel can do the trick here. It not only compresses the images in bulk or before you upload them on your WordPress site, but it also converts them into WebP format to maximise how fast your site loads.

Along with image optimisation, text compression through Gzip or Brotli will ensure text files are delivered faster and reduce the number of bytes transferred.

Server Response Times

Slow server response can greatly impact a site’s speed, which means a poor user experience. Auditing it involves reviewing server configurations, making sure resources are allocated properly and identifying areas that can be optimised.

The assessment should also check browser caching settings which helps improve loading time for repeat visitors. By using cache controls properly you can reduce the need for servers to serve the same assets multiple times.

Content Delivery Network (CDN)

CDNs store copies of your site in different locations around the world so users can download data from the server nearest to them. This reduces latency, improves overall load time and gives users a seamless and efficient browsing experience.

If you haven't yet, you can sign up for a free Cloudflare account and use it as your site's CDN. It solves most of the issues your site has with content delivery without you spending a dime.

Security and HTTPS

In technical SEO audits, proper HTTPS implementation is a basic requirement that not only supports your site’s search engine rankings but also user trust and security. HTTPS stands for HyperText Transfer Protocol Secure. It uses encryption to secure the data passed between the user’s browser and your web server. This is especially important for sites that handle sensitive information such as personal data, payment information and login credentials.

To check if HTTPS is set up properly, you should check for a valid SSL/TLS certificate, which is required to establish a secure connection. There are tools and browser extensions that can help you check if your site is fully secured and also identify mixed content issues where some resources are loaded over HTTP instead of HTTPS.

HTTP Strict Transport Security

Next in the security spectrum is HTTP Strict Transport Security (HSTS) which is a crucial component to secure your HTTPS connection. HSTS is a web security policy mechanism that protects websites from man-in-the-middle attacks such as cookie hijacking and protocol downgrade attacks. By enabling HSTS you’re telling browsers to enforce HTTPS and disallow all HTTP requests so an unsecured connection is not possible.

To check if HSTS is in place you can view the HTTP response headers; look for the “Strict-Transport-Security” header. Along with proper implementation, you should also regularly review and update your HSTS policy to ensure it’s in line with the latest web security guidelines.
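Once you've copied the header value from the response, you can break it down to see what the policy actually says. Here's a small sketch that parses the three standard directives: max-age, includeSubDomains, and preload. The `parse_hsts` function and the sample header value are made up for illustration.

```python
def parse_hsts(header_value: str) -> dict:
    """Split a Strict-Transport-Security header value into its directives."""
    policy = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        if name == "max-age":
            policy["max_age"] = int(value.strip().strip('"'))
        elif name == "includesubdomains":
            policy["include_subdomains"] = True
        elif name == "preload":
            policy["preload"] = True
    return policy


# Sample header value; max-age is in seconds (31536000 is one year).
policy = parse_hsts("max-age=31536000; includeSubDomains; preload")
print(policy)
```

A very short max-age or a missing includeSubDomains directive is worth raising in the audit, since both leave gaps in how long and how widely browsers enforce HTTPS.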

Malware and Vulnerability Scan

Scanning your site for malware and security vulnerabilities is just as important for giving your users an optimal and secure browsing experience.

Malware can compromise sensitive data and also drop your search engine rankings, as search engines prioritise safe and secure browsing environments. Using robust security tools and services will help you identify malware infections, outdated software or misconfigurations that hackers can exploit.

Software Updates

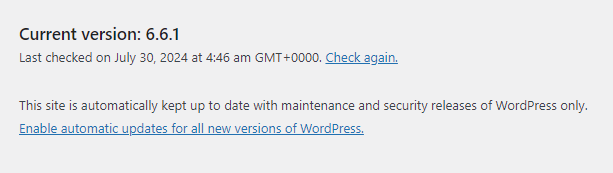

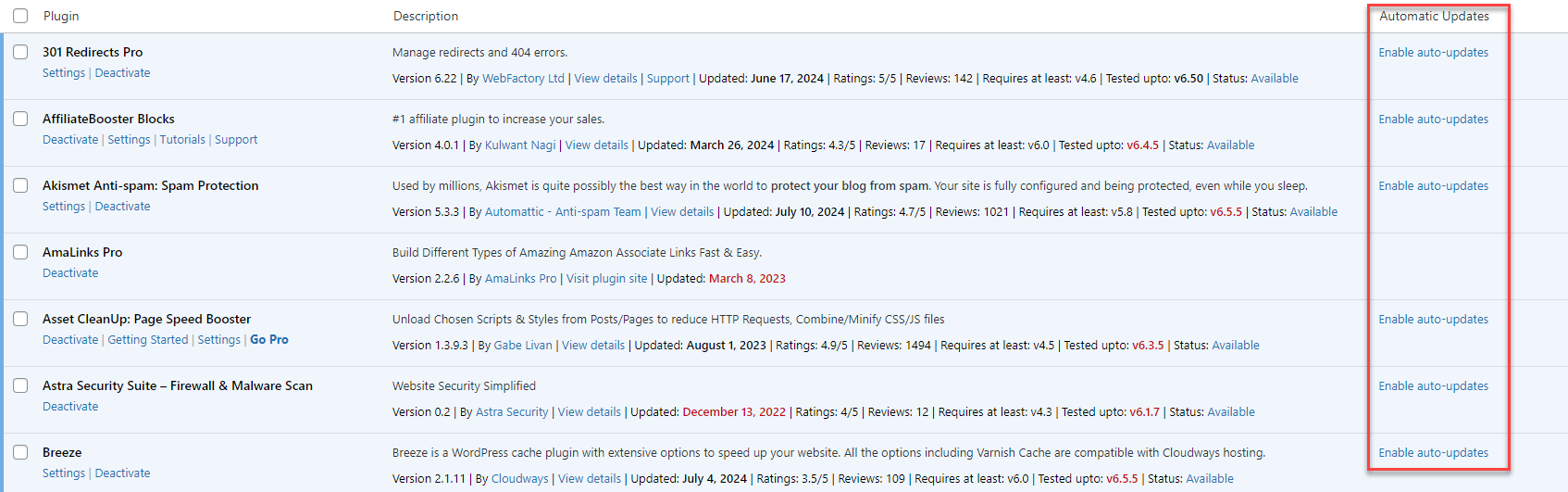

Also, keeping your content management system (CMS) and plugins up to date is a big help in prevention.

Software updates often comes with security patches that fixes known vulnerabilities so your site’s overall security posture is reinforced.

To keep your site's CMS and tools updated, in particular WordPress, you can set them to automatically update whenever a new version is available. Go to Dashboard > Updates to enable auto-updates for WordPress core:

You can do the same for plugins.

Multimedia Optimization

We've already discussed optimising your images for technical SEO, but images aren't the only content type you need to optimise for crawlability and indexability.

In particular, applying video SEO best practices can boost multimedia visibility in search engines. Videos add content and can engage users deeper, so implementing schema markup can give search engines more information about the video content.

As part of this, creating keyword-rich video descriptions and including transcripts can boost relevance and usability. Hosting videos on external platforms like YouTube can also give you additional backlinks and referral traffic to your site.

Lastly, to make sure your multimedia content is fully indexed, create and submit image and video sitemaps. These special sitemaps will make it easier for search engines to find and index your multimedia content and get you more visibility in search results.

Start by collecting essential information about your videos, including titles, descriptions, URLs, and other relevant details. Then, structure this information in XML format according to the video sitemap protocol, with each video entry enclosed in <video:video> tags within a <url> element.

Below is a format you can follow:

<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"
        xmlns:video="http://www.google.com/schemas/sitemap-video/1.1">
  <url>
    <loc>http://www.example.com/videos/some-video-title</loc>
    <video:video>
      <video:thumbnail_loc>http://www.example.com/thumbs/123.jpg</video:thumbnail_loc>
      <video:title>Awesome Video Title</video:title>
      <video:description>This is a description of my awesome video.</video:description>
      <video:content_loc>http://www.example.com/video123.mp4</video:content_loc>
      <video:player_loc>http://www.example.com/videoplayer.php?video=123</video:player_loc>
      <video:duration>600</video:duration>
      <video:publication_date>2023-07-30T14:00:00+00:00</video:publication_date>
    </video:video>
  </url>
</urlset>

This structured format helps search engines understand and index your video content more effectively.

Once you've created your video sitemap, save and submit the sitemap to search engines through their webmaster tools, such as Google Search Console. It's important to keep your video sitemap up to date, reflecting any new videos or changes to existing ones.

By maintaining a comprehensive video sitemap, you provide search engines with valuable metadata about your video content, potentially improving your videos' visibility in search results and driving more traffic to your site.

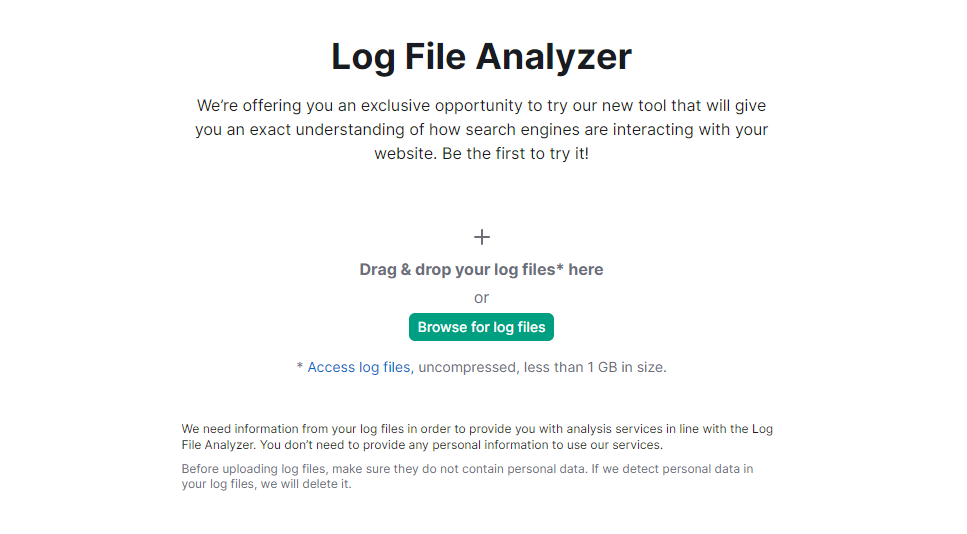

Log File Analysis

Analyse server log files with a log file analyser to see crawl behaviour. Identify crawl errors and inefficiencies to optimise your site. Conducting a technical SEO audit is a must for any site to improve visibility and user experience.

Server log files capture every request made to the server including those from search engine crawlers like Googlebot. By digging into these logs, webmasters can see how search engines interact with their site.

This analysis can help you identify crawl patterns, including the frequency and timing of crawl requests, which can tell you if your pages are being crawled or if certain areas are being neglected.

Besides understanding crawl behaviour, examining server logs can also help you pinpoint specific crawl errors and inefficiencies that are hindering your site performance.

Ahrefs unfortunately can't analyse your site's log file, but Semrush can. First, download the log file from your hosting provider and upload the file to Semrush so it can audit it properly.

Optimising your site based on log file analysis goes beyond fixing the immediate issues. It’s about understanding user behaviour and adjusting your site architecture to be more accessible.

Common issues are 404 errors, redirects, and server errors (5xx). Identifying these problem areas is important because if search engine crawlers encounter big obstacles when trying to access your content, it may lead to poor indexing and lower search rankings.
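If you'd like a quick look before uploading the file anywhere, a few lines of Python can count the status codes Googlebot received. The sketch below assumes your server writes logs in the common "combined" format, and the sample lines, the `LOG_PATTERN` regex, and the `googlebot_status_counts` function are all made up for illustration. It also trusts the user-agent string, which can be faked, so treat the results as a rough guide.

```python
import re
from collections import Counter

# Assumes the "combined" log format: request, status, size, referrer, agent.
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)

# Made-up sample log lines.
SAMPLE_LOG = '''\
66.249.66.1 - - [30/Jul/2023:14:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [30/Jul/2023:14:00:05 +0000] "GET /old-page/ HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
203.0.113.7 - - [30/Jul/2023:14:00:09 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
'''


def googlebot_status_counts(lines) -> Counter:
    """Count response status codes for requests whose agent names Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")] += 1
    return counts


counts = googlebot_status_counts(SAMPLE_LOG.splitlines())
print(counts)
```

A growing share of 404 or 5xx responses in this count is a sign that crawlers are hitting the obstacles described above.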

Post-Audit Actions

Post-audit actions are where you turn the insights from your technical SEO audit into real results. Implementing the right fixes and strategies is what turns your optimisation work into better performance and search visibility.

Prioritise and Implement Fixes

Prioritise issues based on their impact on your website. Start with the critical ones like site speed and mobile optimisation, as these affect user experience and search rankings. Use analytics data to identify the biggest drop-off points and target those first.

Implement changes systematically, focusing on high-impact areas like broken links, irrelevant redirects or incorrect tag usage. Consider using a task management tool to track which issues to tackle first and check them off as you fix them so you won’t miss anything.

Check and Improve

Check regularly to see the impact of your implemented fixes. Track key performance indicators like organic traffic, bounce rates and conversion rates through Google Analytics.

Set up monthly reports to visualise the trends and adjustments over time so you can refine further. Check indexation status and crawl errors in Google Search Console to make sure search engines are digesting your content correctly.

An iterative approach will keep your site optimised and responsive to user behaviour and algorithm changes.

Audit Regularly

Audit regularly to keep your site healthy and ensure that Google and other search engines can crawl and index your website.

Monthly or quarterly audits will help you catch emerging issues before they become big problems. These check-ins will allow you to check the ongoing effectiveness of your SEO strategies.

Consider using automated tools to scan your site regularly for new errors and to stay aligned with best practices. Regular reviews will not only improve your SEO strategy but also keep you updated with industry changes so your site will remain competitive in search.

Summary

Spending time on a technical SEO audit can meaningfully improve your website’s performance and visibility. With the right tools and resources, you can identify and fix the issues that are holding your site back. Regular audits will keep you ahead of the competition and keep your online presence healthy.

Focus on the critical areas like site speed and mobile optimisation and track your progress with key performance indicators.

With the right strategies in place you’ll not only improve your search engine rankings but also user experience and engagement.

To help you out in your technical SEO initiative, choose from and book any of Charles' SEO audits. His expertise and insights from years of auditing small and big sites from various industries uncover technical problems not even the most experienced SEO can spot.

Schedule an audit with him to get started!